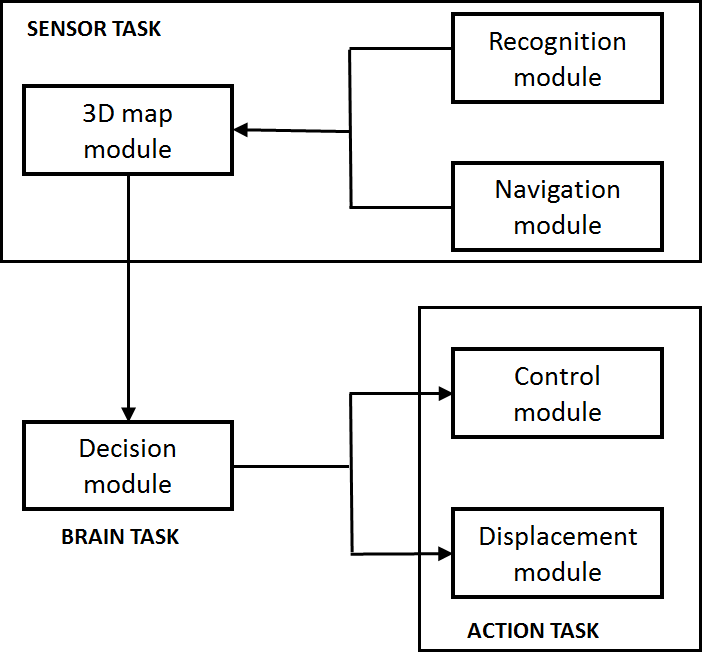

In our software architecture, three tasks are considered: the sensor task, the action task and the brain task. Sensor task: uses the information provided by the sensors to build a 3D map of the environment, localize the robot and locate the objects of interest. Action task: controls the displacement of the robot and the actions needed to recover, transport and position the object of interest. Brain task: receives the information provided by the sensor task and decides which actions are required to achieve the different objectives (recovering objects, navigating and positioning objects). Each task consists of the following modules, which run in parallel and communicate with each other via message passing (Figure 1). Every module has an interface to receive data (input) and another to send data (output):

Figure 1: Diagram of the software architecture
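The parallel, message-passing structure in Figure 1 can be sketched as follows. This sketch assumes Python threads with `queue.Queue` as the message channel; the original does not name its middleware, and a robotics framework may be used in practice. Each module runs in its own thread, reads messages from its input queue and forwards its results to the input queues of the modules connected to it. The `Module` class, the `None` shutdown sentinel and the example module names and payloads are hypothetical.

```python
import queue
import threading


class Module(threading.Thread):
    """A module running in its own thread, with one input queue and any number of output links."""

    def __init__(self, name, process):
        super().__init__(name=name, daemon=True)
        self.inbox = queue.Queue()   # input interface
        self.subscribers = []        # output interface: inboxes of downstream modules
        self.process = process       # maps one input message to one output message (or None)

    def connect(self, other):
        """Send this module's outputs to another module's input queue."""
        self.subscribers.append(other.inbox)

    def run(self):
        while True:
            msg = self.inbox.get()
            if msg is None:          # sentinel: propagate shutdown downstream, then stop
                for q in self.subscribers:
                    q.put(None)
                break
            out = self.process(msg)
            if out is not None:      # a module may consume a message without producing output
                for q in self.subscribers:
                    q.put(out)


if __name__ == "__main__":
    results = []
    # Hypothetical pipeline: recognition -> 3D map -> decision
    recognition = Module("recognition", lambda frame: {"object": "cup", "xyz": (0.4, 0.1, 0.2), "frame": frame})
    mapping = Module("3d_map", lambda det: {**det, "in_map": True})
    decision = Module("decision", lambda obs: results.append(("pick", obs["object"], obs["frame"])))
    recognition.connect(mapping)
    mapping.connect(decision)
    for m in (recognition, mapping, decision):
        m.start()
    for frame_id in range(3):
        recognition.inbox.put(frame_id)
    recognition.inbox.put(None)      # shut the pipeline down
    decision.join()
    print(results)
```

Because each module owns a FIFO queue and runs in a single thread, messages from one producer are handled in the order they were sent.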

Recognition module: implements computer vision (OpenCV) and deep learning (YOLO framework (Redmon, 2018)) algorithms to detect and recognize the objects of interest in real time. Its output is the 3D position (X, Y, Z coordinates) of each object of interest.
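The step from a 2D detection to a 3D position is not detailed in the text. One common approach, assumed here, uses a depth image aligned with the color image and the pinhole camera model. The detection's bounding-box center (u, v) is back-projected using the depth Z and the camera intrinsics fx, fy, cx, cy: X = (u − cx)·Z/fx, Y = (v − cy)·Z/fy. The box format, the `Intrinsics` values and the 5×5 median-depth window are hypothetical. The result is expressed in the camera frame.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole camera intrinsics in pixels (hypothetical values in the usage below)."""
    fx: float
    fy: float
    cx: float
    cy: float


def backproject(u, v, depth_m, K):
    """Back-project pixel (u, v) at depth Z (metres) into camera coordinates (X, Y, Z)."""
    if depth_m <= 0:
        raise ValueError("invalid depth")
    x = (u - K.cx) * depth_m / K.fx
    y = (v - K.cy) * depth_m / K.fy
    return x, y, depth_m


def detection_to_3d(box, depth_image, K):
    """box = (x_min, y_min, x_max, y_max) in pixels, e.g. from a YOLO detection.
    depth_image is a list of rows of depths in metres, aligned with the colour image."""
    x_min, y_min, x_max, y_max = box
    u, v = (x_min + x_max) / 2.0, (y_min + y_max) / 2.0
    ui, vi = int(round(u)), int(round(v))
    rows, cols = len(depth_image), len(depth_image[0])
    # Median of valid (non-zero) depths in a 5x5 window, to be robust to holes in the depth map
    window = sorted(
        depth_image[r][c]
        for r in range(vi - 2, vi + 3)
        for c in range(ui - 2, ui + 3)
        if 0 <= r < rows and 0 <= c < cols and depth_image[r][c] > 0
    )
    if not window:
        return None  # no valid depth near the detection
    return backproject(u, v, window[len(window) // 2], K)


if __name__ == "__main__":
    K = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
    depth = [[1.5] * 640 for _ in range(480)]
    print(detection_to_3d((300, 200, 340, 280), depth, K))
```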

Navigation module: detects obstacles and identifies the paths in the environment along which the robot can navigate.
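The text does not say how paths are computed. As one standard option, assumed here and not necessarily the authors' method, detected obstacles can be marked on a 2D occupancy grid and a path can be searched with A*. The grid encoding (0 = free, 1 = obstacle) and 4-connected moves with unit cost are hypothetical simplifications.

```python
import heapq


def astar(grid, start, goal):
    """A* on a 4-connected occupancy grid (0 = free, 1 = obstacle).
    Returns the list of cells from start to goal, or None if the goal is unreachable."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for 4-connected moves with unit cost
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:      # walk parents back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue                     # stale heap entry, a cheaper route was found later
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None


if __name__ == "__main__":
    grid = [
        [0, 0, 0, 0],
        [1, 1, 1, 0],   # wall with a gap on the right
        [0, 0, 0, 0],
    ]
    print(astar(grid, (0, 0), (2, 0)))
```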

3D map module: builds and updates a map of the environment while simultaneously keeping track of the robot's location within it. The map also includes the locations of the objects of interest.
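A full simultaneous localization and mapping pipeline is beyond a short example. The sketch below covers only how object locations could be kept in the map. It assumes that the robot pose (x, y, θ) comes from the localization, and that object positions have already been transformed from the camera frame to the robot frame, which requires camera extrinsics the text does not give. Each observation is moved into the map frame with a planar rigid transform, and repeated observations of the same label are averaged. The one-instance-per-label assumption and the `ObjectMap` class are hypothetical.

```python
import math


def robot_to_map(pose, point_robot):
    """Transform a point from the robot frame to the map frame.
    pose = (x, y, theta) of the robot in the map (planar motion); z is passed through unchanged."""
    x, y, theta = pose
    px, py, pz = point_robot
    c, s = math.cos(theta), math.sin(theta)
    return (x + c * px - s * py, y + s * px + c * py, pz)


class ObjectMap:
    """Running average of each labelled object's map-frame position (one object per label)."""

    def __init__(self):
        self.objects = {}  # label -> (sum_x, sum_y, sum_z, count)

    def update(self, label, pose, point_robot):
        mx, my, mz = robot_to_map(pose, point_robot)
        sx, sy, sz, n = self.objects.get(label, (0.0, 0.0, 0.0, 0))
        self.objects[label] = (sx + mx, sy + my, sz + mz, n + 1)

    def position(self, label):
        sx, sy, sz, n = self.objects[label]
        return (sx / n, sy / n, sz / n)


if __name__ == "__main__":
    m = ObjectMap()
    m.update("cup", (1.0, 2.0, math.pi / 2), (1.0, 0.0, 0.5))  # robot facing +y sees cup 1 m ahead
    print(m.position("cup"))
```

A plain average is the simplest fusion rule. A probabilistic filter that weights each observation by its uncertainty would fit the rest of the architecture better, at the cost of more code.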

Control module: determines the joint parameters of the robot arm that bring its end effector to the 3D position where the object of interest is located. Real-time inverse kinematics methods are used to solve for the joint angles.
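The arm's kinematic structure is not given, so the following is only an illustration of closed-form inverse kinematics under assumed geometry. It assumes a 3-DOF arm: a base joint that yaws toward the target, followed by a shoulder and an elbow that move two links (lengths l1, l2) in a vertical plane. The shoulder is assumed to sit at the base origin, and the target (X, Y, Z) is expressed in the arm's base frame. The function names and the `elbow_sign` parameter, which selects one of the two mirror solutions, are hypothetical. A forward-kinematics function is included to check the result.

```python
import math


def ik_2link(x, y, l1, l2, elbow_sign=1):
    """Closed-form IK of a planar 2-link arm: joint angles (q1, q2) reaching (x, y)."""
    cos_q2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(cos_q2) > 1.0 + 1e-9:
        raise ValueError("target out of reach")
    cos_q2 = max(-1.0, min(1.0, cos_q2))  # clamp rounding errors at the workspace boundary
    sin_q2 = elbow_sign * math.sqrt(1.0 - cos_q2 * cos_q2)
    q2 = math.atan2(sin_q2, cos_q2)
    q1 = math.atan2(y, x) - math.atan2(l2 * sin_q2, l1 + l2 * cos_q2)
    return q1, q2


def ik_3dof(X, Y, Z, l1, l2, elbow_sign=1):
    """Base yaw plus planar shoulder/elbow solution for a target in the base frame."""
    base = math.atan2(Y, X)   # rotate the arm plane towards the target
    r = math.hypot(X, Y)      # horizontal distance from the base axis
    q1, q2 = ik_2link(r, Z, l1, l2, elbow_sign)
    return base, q1, q2


def fk_3dof(base, q1, q2, l1, l2):
    """Forward kinematics, used to verify an IK solution."""
    r = l1 * math.cos(q1) + l2 * math.cos(q1 + q2)
    z = l1 * math.sin(q1) + l2 * math.sin(q1 + q2)
    return r * math.cos(base), r * math.sin(base), z


if __name__ == "__main__":
    q = ik_3dof(0.3, 0.1, 0.2, l1=0.3, l2=0.25)
    print(q, fk_3dof(*q, 0.3, 0.25))
```

A closed-form solution like this runs in constant time, which suits real-time control. Arms with more joints usually need numerical solvers instead.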

Displacement module: controls the robot's displacement through the steering motors by changing the speed and direction of the wheels. The robot moves at the target speed according to the translation (X, Y) and rotation parameters.
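Because the wheels are steered individually, one plausible reading is a swerve-style drive. That is an assumption, as the drive geometry is not specified. Under it, a commanded translation (vx, vy) and rotation ω are converted into a speed and a steering angle for each wheel. A wheel at position (rx, ry) relative to the robot center must move with velocity (vx − ω·ry, vy + ω·rx). The four-wheel layout and the `wheel_commands` name are hypothetical.

```python
import math

# Hypothetical layout: (x, y) of each steered wheel relative to the robot centre, in metres
WHEELS = {
    "front_left": (0.2, 0.2),
    "front_right": (0.2, -0.2),
    "rear_left": (-0.2, 0.2),
    "rear_right": (-0.2, -0.2),
}


def wheel_commands(vx, vy, omega, wheels=WHEELS):
    """Map a body velocity (vx, vy in m/s, omega in rad/s) to (speed, steering angle) per wheel."""
    commands = {}
    for name, (rx, ry) in wheels.items():
        # Contact-point velocity: v + omega x r (planar cross product)
        wx = vx - omega * ry
        wy = vy + omega * rx
        commands[name] = (math.hypot(wx, wy), math.atan2(wy, wx))
    return commands


if __name__ == "__main__":
    for name, (speed, angle) in wheel_commands(0.5, 0.0, 1.0).items():
        print(f"{name}: {speed:.3f} m/s at {math.degrees(angle):.1f} deg")
```

Real controllers often add one refinement not shown here. If a new steering angle is more than 90° away from the current one, they flip it by 180° and reverse the wheel speed, so the steering motor turns less.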

Decision module: manages and controls the workflow of actions based on the information provided by the 3D map module. Decision-making strategies that model the uncertainty of unknown environments are implemented to accomplish the given tasks [2].
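The specific decision-making strategy is in [2] and is not reproduced here. As a generic illustration of reasoning under uncertainty, and not the authors' method, the decision module could keep a probability that an object is present at each candidate location. It would update that probability with Bayes' rule after each noisy detection, then move toward the location with the highest expected utility: P(object) × reward − travel cost. The detector rates `p_tp` and `p_fp`, the reward and the cost per metre are hypothetical parameters.

```python
def bayes_update(prior, detected, p_tp=0.9, p_fp=0.1):
    """Posterior P(object present) after one detection result.
    p_tp = P(detect | present), p_fp = P(detect | absent): hypothetical sensor model."""
    if detected:
        num = p_tp * prior
        den = num + p_fp * (1.0 - prior)
    else:
        num = (1.0 - p_tp) * prior
        den = num + (1.0 - p_fp) * (1.0 - prior)
    return num / den


def choose_target(beliefs, distances, reward=10.0, cost_per_m=1.0):
    """Location maximising expected utility: P(object) * reward - travel cost."""
    return max(beliefs, key=lambda loc: beliefs[loc] * reward - cost_per_m * distances[loc])


if __name__ == "__main__":
    beliefs = {"table": 0.5, "shelf": 0.5}
    beliefs["table"] = bayes_update(beliefs["table"], detected=True)
    beliefs["shelf"] = bayes_update(beliefs["shelf"], detected=False)
    print(beliefs, choose_target(beliefs, {"table": 3.0, "shelf": 1.0}))
```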